Collaborative Learning Without Data Sharing: A Federated Learning Approach

Manish Kumar Saraf* and Geetika Saraf^

Most people think that machine learning requires powerful CPUs, GPUs, or the cloud. But what about small devices such as an iPhone, an Android phone, a tablet, a Raspberry Pi, Square, Movidius, etc.? Can we run machine learning on smart devices and at the edge? In this article, I discuss various options for using small devices for machine learning. You will learn what distributed machine learning is and how federated learning can help you maintain privacy in a connected world.

Data-sharing in healthcare

We need a large amount of high-quality data to build a machine learning (deep learning) model. Healthcare generates a huge amount of data every day. A recent study reported that the volume of digital data may reach 44 zettabytes by 2020, almost doubling every year.

If the volume of data grows every year, why are we not able to build good machine learning models? The answer is the lack of high-quality, specific, annotated data. In some cases, the specific data are unavailable (or very limited) at a single institute. Data annotation (labeling of medical data) requires expert knowledge, which restricts the use of commercial annotation services. Collaboration between institutes could address such challenges. To obtain a sufficiently large dataset, the data are pooled from various organizations and stored in a central location.

Challenges with Data-sharing

Sharing data with a centralized location can be a cumbersome process, and it becomes more painful in international collaborations. Data sharing has various limitations. For example:

o Setting up collaborations with a mutual agreement is sometimes hard to achieve

o It is a very time-consuming process

o Multiple legal issues

o IRB and HIPAA regulations

o Data privacy issue

o Technical constraints

o Data ownership challenge

Considering all these issues, especially the legal ones, it is essential either to strip personally identifiable items from the patient's data or to find an alternative to centralized learning that builds the AI model without data sharing.

One alternative to data sharing is to shift the centralization from data space to parameter space. Here, training is performed on multiple devices located in different places, and each device shares its locally trained model with a central server. The central server learns from the parameters rather than from the data itself.

Federated learning: A possible solution

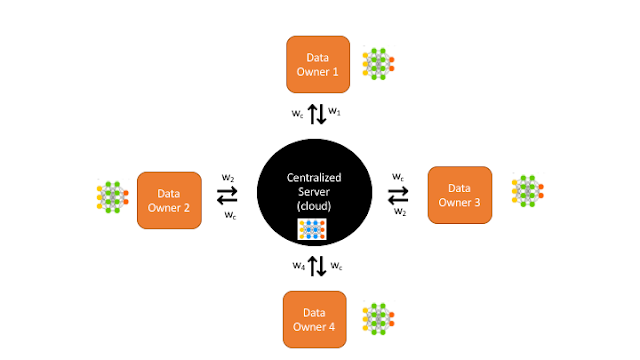

Federated learning is a very good example of learning in parameter space. In federated learning, the data owners build the AI model locally using their own data and share the neural network model (W1 to W4) with the central server. The server collects the updated models from all owners and optimizes its own model. The new shared parameters (Wc) of the optimized model are then sent back to the owners for further training.

Figure 1: The architecture of federated learning
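The round trip described above (owners train locally, the server combines W1 to W4 into Wc, and Wc goes back to the owners) can be illustrated with a small sketch. This is not the authors' implementation. It assumes the server combines the models by averaging the parameters, weighted by each owner's dataset size (the commonly used federated averaging scheme), and it uses a toy linear model y = w*x + b in place of a neural network. The function names, the synthetic data, and the hyperparameters are all hypothetical.

```python
import random


def local_train(weights, data, lr=0.05, epochs=5):
    """One owner's local update: a few gradient-descent passes on y = w*x + b."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b)


def federated_average(local_weights, sample_counts):
    """Server step (assumed): average parameters, weighted by local dataset size."""
    total = sum(sample_counts)
    w = sum(lw[0] * n for lw, n in zip(local_weights, sample_counts)) / total
    b = sum(lw[1] * n for lw, n in zip(local_weights, sample_counts)) / total
    return (w, b)


def make_owner_data(rng, n, x_low, x_high):
    """Hypothetical private dataset: noisy samples of y = 2x + 1."""
    data = []
    for _ in range(n):
        x = rng.uniform(x_low, x_high)
        data.append((x, 2.0 * x + 1.0 + rng.gauss(0.0, 0.05)))
    return data


def run_federation(rounds=200, seed=0):
    rng = random.Random(seed)
    # Four owners with different amounts of data and different input ranges.
    # The raw data never leaves this list's individual entries.
    owners = [
        make_owner_data(rng, 20, -1.0, 0.0),
        make_owner_data(rng, 40, 0.0, 1.0),
        make_owner_data(rng, 30, -1.0, 1.0),
        make_owner_data(rng, 10, 0.5, 1.5),
    ]
    counts = [len(d) for d in owners]
    central = (0.0, 0.0)  # Wc, the shared model held by the server
    for _ in range(rounds):
        # Each owner starts from Wc and trains on its own data (W1..W4).
        local = [local_train(central, d) for d in owners]
        # Only the parameters are sent to the server and combined.
        central = federated_average(local, counts)
    return central


if __name__ == "__main__":
    w, b = run_federation()
    print(f"central model: w={w:.3f}, b={b:.3f}")
```

In this sketch the server only ever sees the pairs (w, b) returned by each owner, never the (x, y) samples. Weighting by dataset size is one design choice among several; an unweighted mean would also fit the description in the text.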

Application of Federated Learning in Healthcare

Hospitals, clinics, and medical institutes are the best places to apply federated learning to healthcare datasets. The approach solves many problems of data sharing, including the data privacy issue. It can also improve efficiency and save computation cost. Sheller and his colleagues tested the concept of federated learning by building a deep learning model without sharing data among multi-institutional collaborators. The performance of the federated learning model (without data sharing) was very similar to that of the data-sharing model, suggesting federated learning as an alternative method for building AI models without compromising data privacy (Sheller, Reina, Edwards, Martin, & Bakas, 2018).

Limitations of Federated Learning

Federated learning is not applicable where a large amount of training data is required to build the AI model. For example, on small devices the amount of data is limited, so it would be difficult to achieve good accuracy with such a small dataset. Moreover, we can't solve a large number of AI problems on the same device.

Contact

We are running a collaborative federated learning project. If you want to collaborate or would like more information, please contact me at saraf@databrain.pro

* AI-Expert and Cofounder of DataBrain.

^ Software Developer/Engineer

References

Corbacho, J. (2018). Federated Learning – Bringing Machine Learning to the edge with Kotlin and Android. Retrieved October 7, 2018, from https://proandroiddev.com/federated-learning-e79e054c33ef

Konečný, J., McMahan, H. B., Yu, F. X., Richtarik, P., Suresh, A. T., & Bacon, D. (2016). Federated Learning: Strategies for Improving Communication Efficiency. Retrieved from https://ai.google/research/pubs/pub45648

Rodriguez, J. (2018). What’s New in Deep Learning Research: Understanding Federated Learning. Retrieved October 7, 2018, from https://towardsdatascience.com/whats-new-in-deep-learning-research-understanding-federated-learning-b14e7c3c6f89

Sheller, M. J., Reina, G. A., Edwards, B., Martin, J., & Bakas, S. (2018). Multi-Institutional Deep Learning Modeling Without Sharing Patient Data: A Feasibility Study on Brain Tumor Segmentation. AI Intel, September, 1–13. Retrieved from https://ai.intel.com/ai/wp-content/uploads/sites/69/Multi-Institutional-DL-Modeling-wo-Sharing-Patient-Data.pdf

de Vinzelles, G. (2018). Federated Learning, a step closer towards confidential AI. Retrieved October 7, 2018, from https://blog.otiumcapital.com/otium-neural-newsletter-1-federated-learning-a-step-closer-towards-confidential-ai-efe28832006f
